Azure Blob Storage

This feature is available on our Premium and Enterprise plans.

Send Customer.io data about messages, people, metrics, etc. to Microsoft Azure Blob Storage. From here, you can ingest your data into the data warehouse of your choosing. This integration exports files as often as every 15 minutes, helping you keep up to date on your audience’s message activities.

How it works

This integration exports individual parquet files for Deliveries, Metrics, Subjects, Outputs, Content, People, and Attributes to your storage bucket. Each parquet file contains data that changed since the last export.

Once the parquet files are in your storage bucket, you can import them into data platforms like Fivetran or data warehouses like Redshift, BigQuery, and Snowflake.

Note that this integration only publishes parquet files to your storage bucket. You must configure your data warehouse to ingest this data. There are many approaches to ingesting data, but most require a COPY command to load the parquet files from your bucket. After you load the parquet files, you should set them to expire so they’re deleted automatically.

We attempt to export parquet files every 15 minutes, though actual sync intervals and processing times may vary. When you sync large data sets, or when Customer.io experiences a high volume of concurrent sync operations, it can take up to several hours to process and export data. This feature is not intended to sync data in real time.


Your initial sync includes historical data

During the first sync, you’ll receive the history of your Deliveries, Metrics, Subjects, and Outputs data. However, people who were deleted or suppressed before the first sync aren’t included in the People export, and their historical data in the other export files is anonymized.

The initial export vs incremental exports

Your initial sync is a set of files containing historical data to represent your workspace’s current state. Subsequent sync files contain changesets.

- Metrics: The initial metrics sync is broken up into files with two sequence numbers, named as follows: <name>_v4_<workspace_id>_<sequence1>_<sequence2>.

- Attributes: The initial Attributes sync includes a list of profiles and their current attributes. Subsequent files only contain attribute changes, with one change per row.

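Each time we sync a file, we decide what to send:

- If the file was already enabled, we send the changes since the last sync.

- If the file wasn’t enabled, but was enabled at some point in the past, we send the changeset since the file was disabled.

- If the file was never enabled, we send all history.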
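The per-file decision (already enabled, previously enabled, or never enabled) can be modeled as a small function. This is a minimal sketch of the documented logic only. Customer.io makes this decision on its side, and the enum and function names here are hypothetical.

```python
from enum import Enum


class SyncPayload(Enum):
    """What the next sync sends for one export file (hypothetical model)."""
    CHANGES_SINCE_LAST_SYNC = "changes since last sync"
    CHANGES_SINCE_DISABLED = "changeset since file was disabled"
    FULL_HISTORY = "all history"


def next_sync_payload(enabled_at_last_sync: bool, previously_enabled: bool) -> SyncPayload:
    """Model the documented decision for a single file; not Customer.io's code."""
    if enabled_at_last_sync:
        # The file was already on: send only what changed since the last sync.
        return SyncPayload.CHANGES_SINCE_LAST_SYNC
    if previously_enabled:
        # The file was turned off and is being turned back on: catch up.
        return SyncPayload.CHANGES_SINCE_DISABLED
    # The file has never been on: its first sync carries the full history.
    return SyncPayload.FULL_HISTORY
```

For example, `next_sync_payload(False, False)` returns `SyncPayload.FULL_HISTORY`, which matches a file you turn on for the first time.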

For example, let’s say you’ve enabled the Attributes export. We will attempt to sync your data to your storage bucket every 15 minutes:

- 12:00pm We sync your Attributes Schema for the first time. This includes a list of profiles and their current attributes.

- 12:05pm User1’s email is updated to company-email@example.com.

- 12:10pm User1’s email is updated to personal-email@example.com.

- 12:15pm We sync your data again. In this export, you would only see attribute changes, with one change per row. User1 would have one row for the email change.

Requirements

If you use a firewall or an allowlist, you must allow the following IP addresses to support traffic from Customer.io.

Make sure you use the correct IP addresses for your account region.

| US Region | EU Region |
|---|---|
| 34.71.192.245 | 34.76.81.2 |
| 35.184.88.76 | 35.187.55.80 |
| 34.72.101.57 | 104.199.99.65 |
| 34.123.199.33 | 34.77.146.181 |
| 34.67.167.190 | 34.140.234.108 |
| | 35.240.84.170 |
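If you manage your allowlist in code, you can keep the regional addresses in one place and derive /32 rules from them. This is a minimal sketch using Python’s `ipaddress` module. The dictionary and function names are hypothetical, and it assumes that 35.240.84.170 (the single address on the table’s last row) belongs to the EU region.

```python
import ipaddress

# Customer.io outgoing Data Warehouse IPs, copied from the table above.
# Assumption: 35.240.84.170 (the table's last row) is an EU address.
CUSTOMERIO_DW_IPS = {
    "us": ["34.71.192.245", "35.184.88.76", "34.72.101.57",
           "34.123.199.33", "34.67.167.190"],
    "eu": ["34.76.81.2", "35.187.55.80", "104.199.99.65",
           "34.77.146.181", "34.140.234.108", "35.240.84.170"],
}


def allowlist_cidrs(region: str) -> list[str]:
    """Return the region's addresses as single-host /32 CIDR strings."""
    return [str(ipaddress.ip_network(f"{ip}/32")) for ip in CUSTOMERIO_DW_IPS[region]]


def is_allowed(source_ip: str, region: str) -> bool:
    """Check whether a source address is on the region's allowlist."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowlist_cidrs(region))
```

Use only the list for your account region. Addresses from the other region won’t match.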

Do you use other Customer.io features?

These IP addresses are specific to outgoing Data Warehouse integrations. If you use your own SMTP server or receive webhooks, you may also need to allow additional addresses. See our complete IP allowlist.

Set up an Azure Blob Storage integration

As a part of this process, you’ll create an Access policy and a Shared Access Signature (SAS) URL. The Shared Access Signature grants Customer.io access to your Azure blob container, but typically has a limited expiration date. Before you generate a SAS URL, you’ll create the Access policy (with read, write, add, create, and list permissions) that lets you set a longer expiration date for your SAS URL and provides a way to revoke the token later, if you decide to shut off this integration for any reason.

Log in to your Azure account, go to Storage browser, and select Blob Containers.

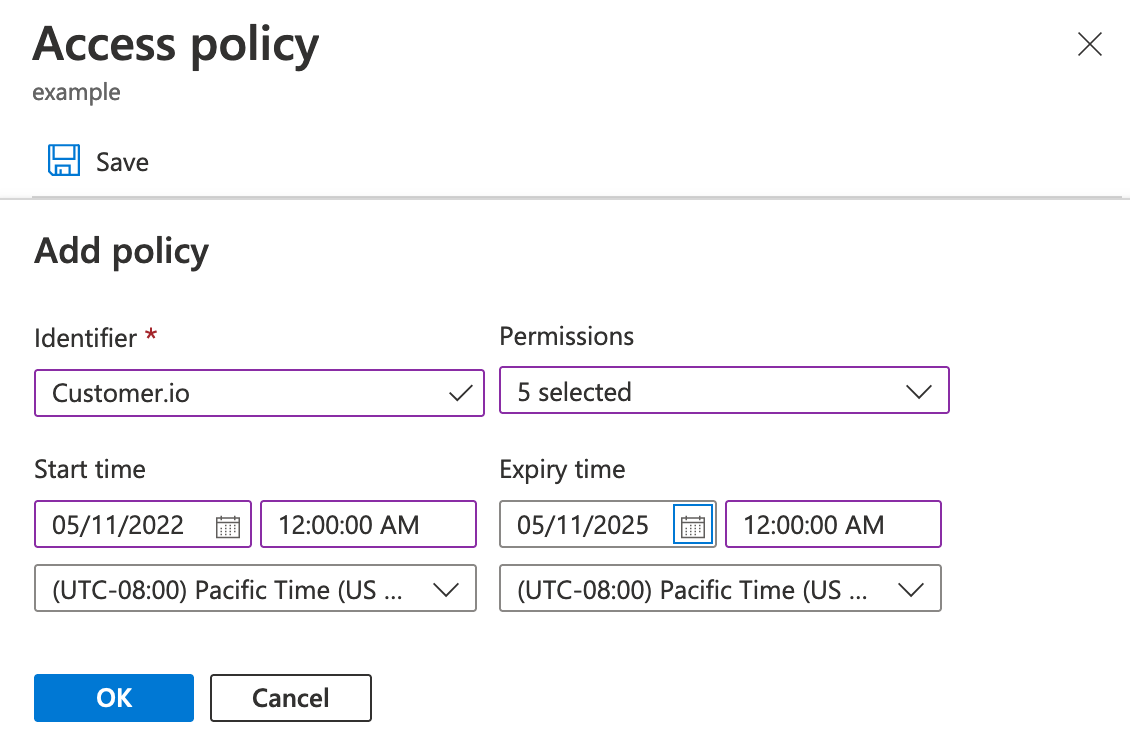

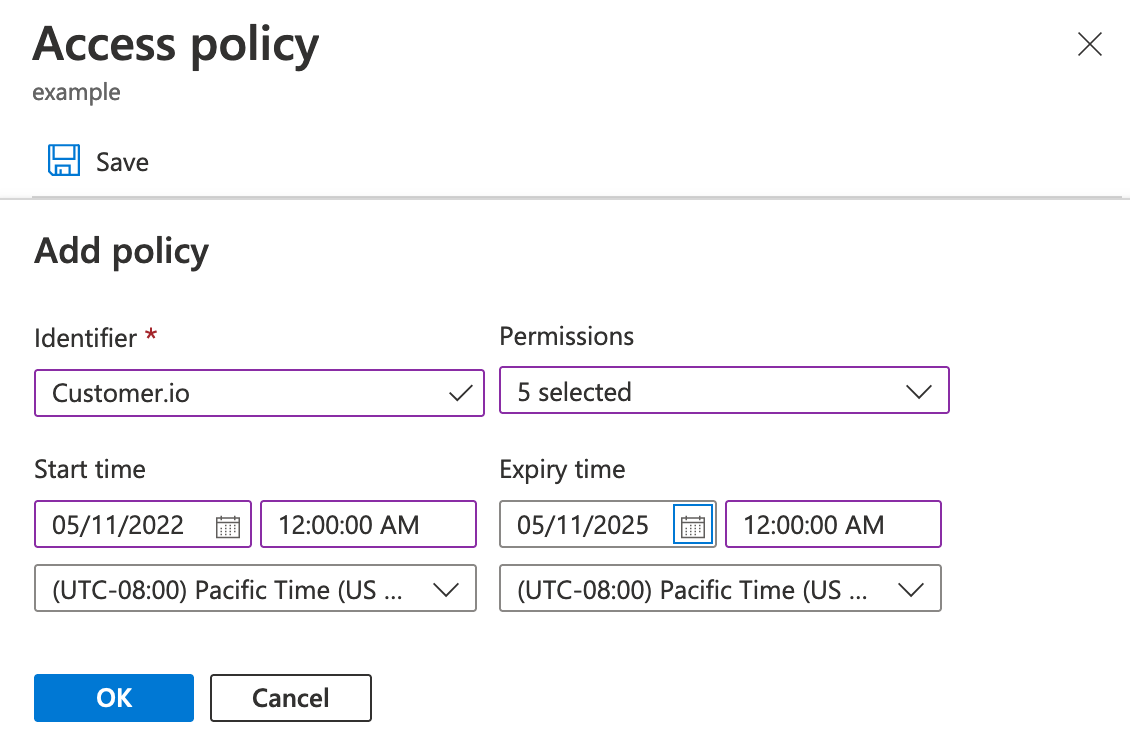

Right-click the container you want to export Customer.io data to, and select Access policy. This creates a policy that lets you generate a SAS URL with a long expiry date.

- Click Add policy and set an Identifier for the policy. This is just the name of the access policy that you’ll use in later steps.

- Click Permissions and select read, write, add, create, and list.

- Set the Start time to the current date.

- Set the Expiry time to a date well into the future.

- Click OK and then click Save.

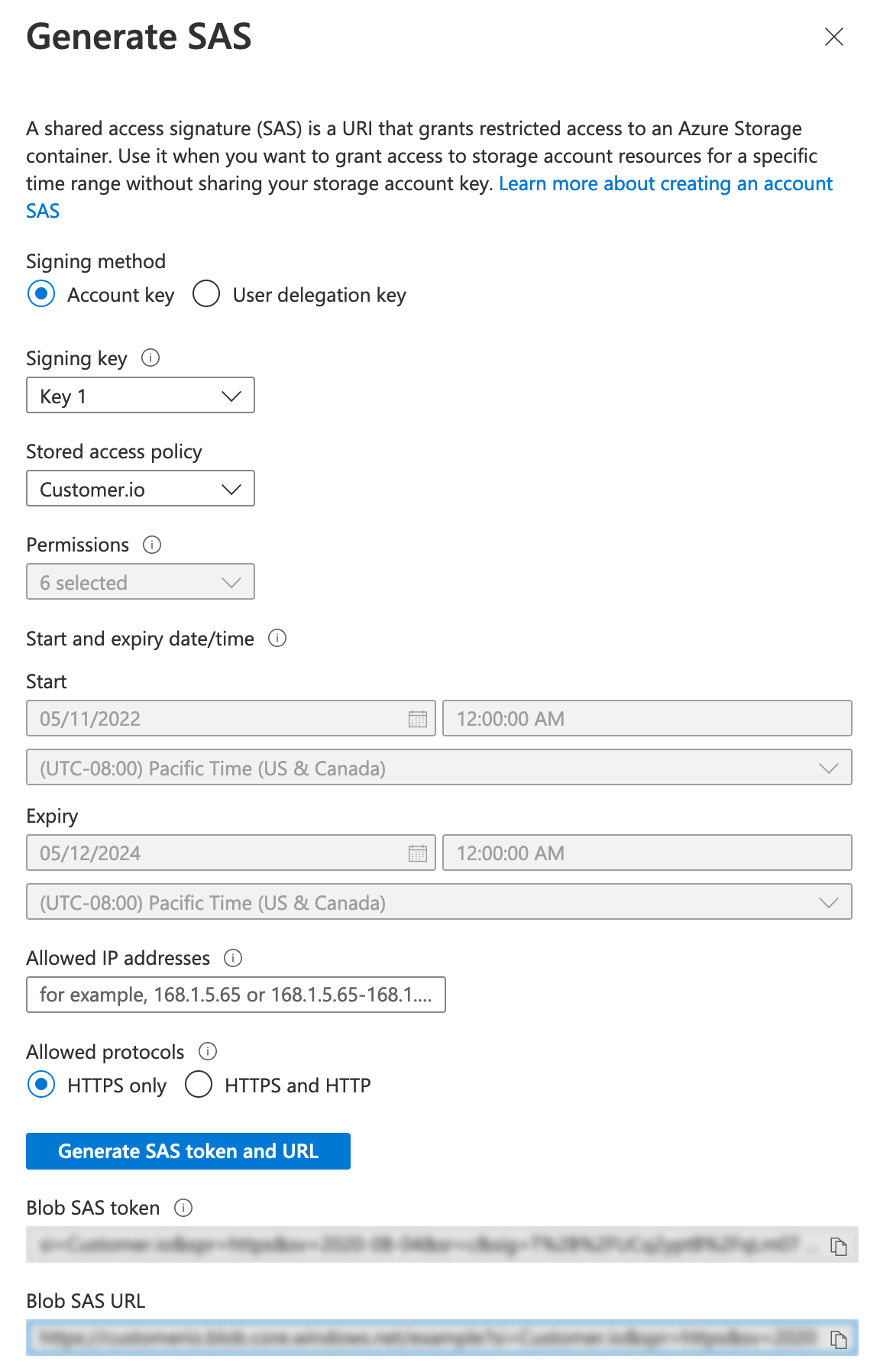

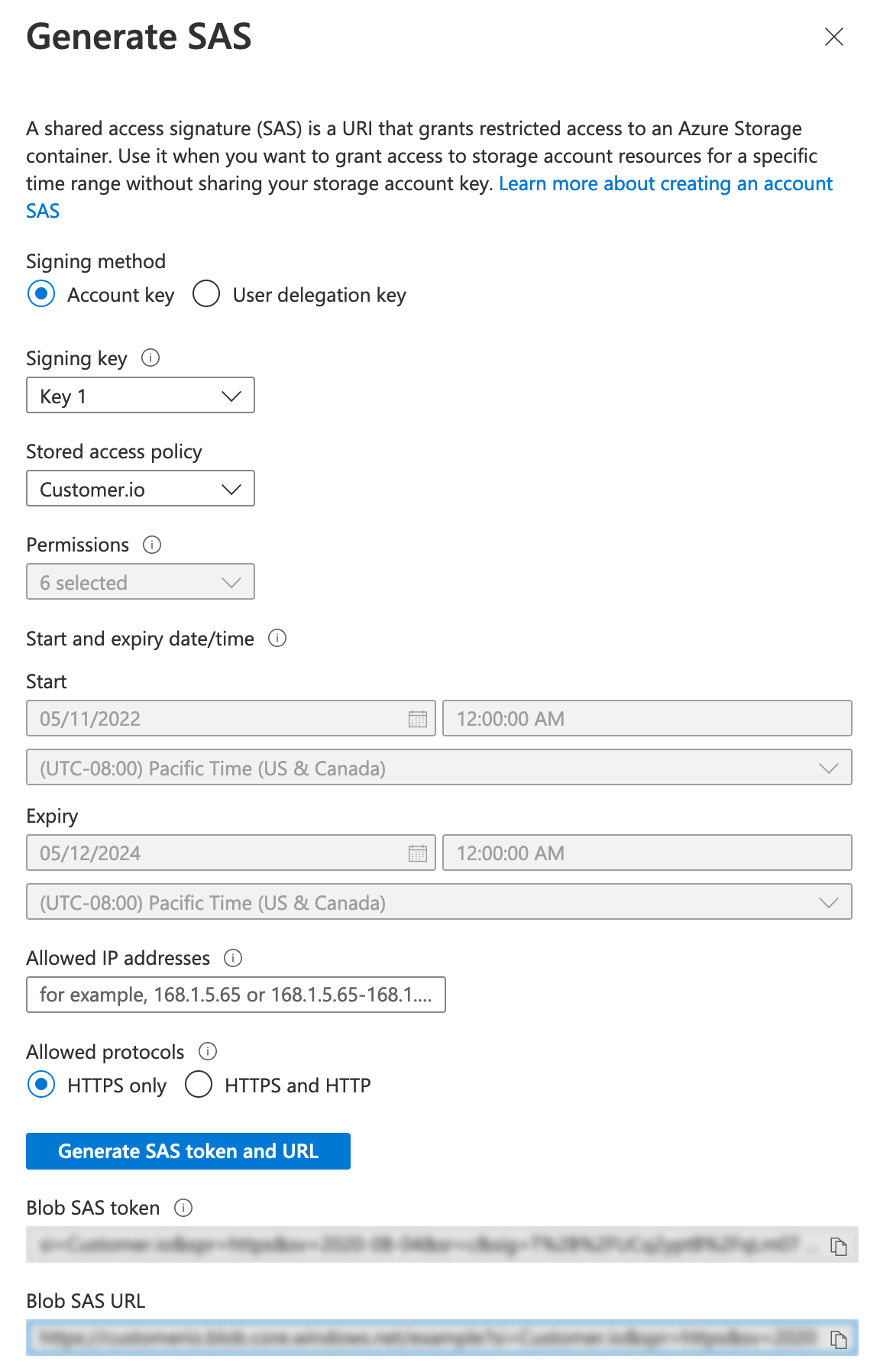

Right-click the container again and select Generate SAS to generate the URL that Customer.io will use to access your Azure container.

- Select the Stored access policy you created in previous steps.

- (Optional) List Customer.io’s IP addresses under Allowed IP addresses to provide an extra layer of security for your SAS URL.

- Click Generate SAS token and URL and copy the URL. When you close the dialog, you won’t be able to access the token or URL again, so make sure that you copy the URL. You’ll need it in later steps.
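Before you paste the URL into Customer.io, you can run a quick local sanity check on it. This sketch reads Azure’s standard SAS query parameters: `sr` (signed resource, `c` for a container), `si` (the stored access policy identifier), `sp` (permissions), and `sig` (the signature). The function name and messages are hypothetical. When a stored access policy supplies the permissions, Azure omits `sp` from the URL, so the sketch only checks permissions if they appear. This doesn’t replace the Validate & select data step.

```python
from urllib.parse import parse_qs, urlparse

# read, add, create, write, list: the permissions this integration needs
REQUIRED_PERMISSIONS = set("racwl")


def check_sas_url(sas_url: str) -> list[str]:
    """Return problems found in a container SAS URL (an empty list means none found)."""
    parsed = urlparse(sas_url)
    params = {key: values[0] for key, values in parse_qs(parsed.query).items()}
    problems = []
    if parsed.scheme != "https":
        problems.append("URL should use https")
    if "sig" not in params:
        problems.append("missing signature (sig); copy the full SAS URL")
    if params.get("sr") != "c":
        problems.append("SAS should be scoped to a container (sr=c)")
    if "si" not in params:
        problems.append("no stored access policy (si); expiry may be short")
    # If permissions are in the URL rather than the policy, all must be present.
    if "sp" in params:
        missing = REQUIRED_PERMISSIONS - set(params["sp"])
        if missing:
            problems.append("missing permissions: " + "".join(sorted(missing)))
    return problems
```

A URL generated from a stored access policy, such as `https://<account>.blob.core.windows.net/<container>?sv=...&si=<policy>&sr=c&sig=...`, should return an empty list.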

Go to Customer.io and select Data & Integrations > Integrations > Azure Blob Storage.

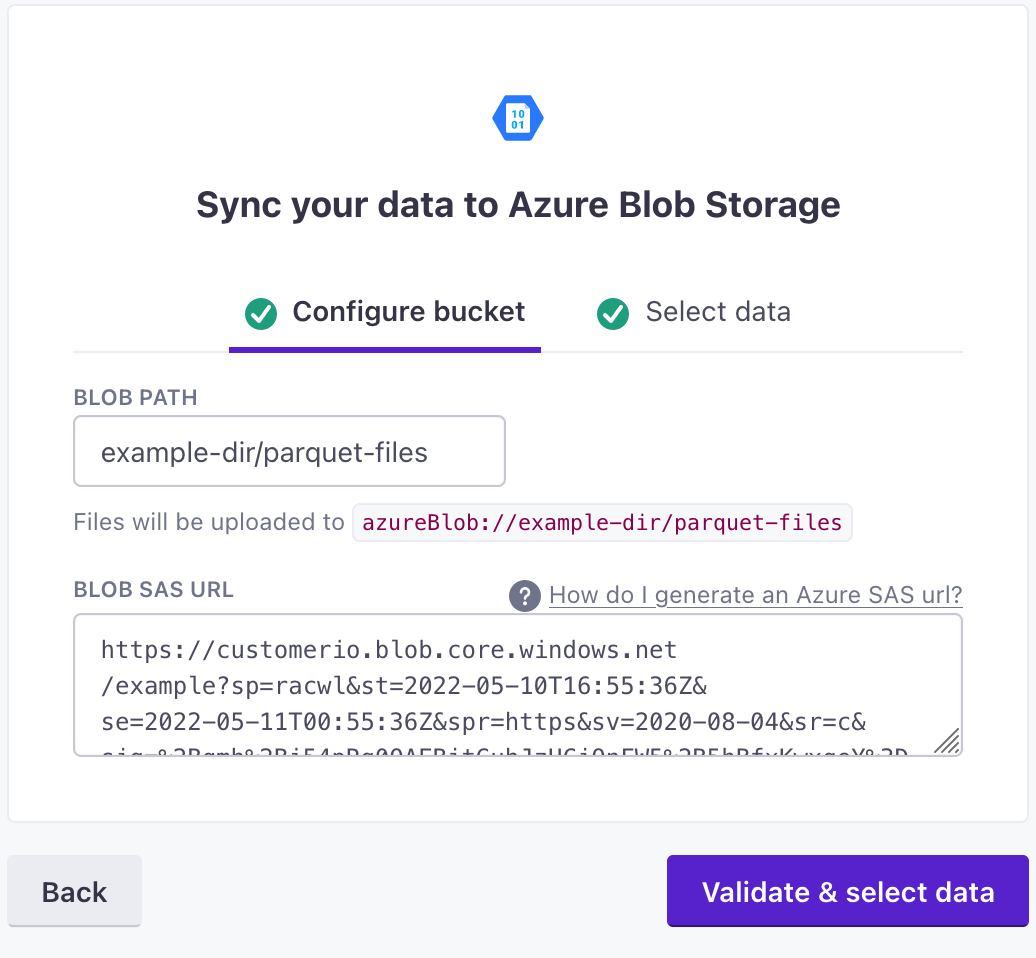

Click Sync your Azure Blob Storage bucket.

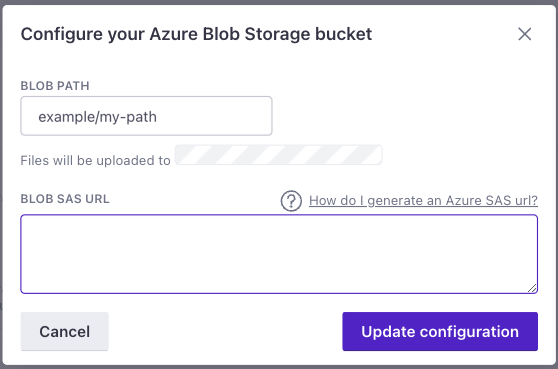

Enter the Blob Path. This is the directory in your container where you want us to deposit parquet files with each sync. If you don’t provide a path, we deposit files in the root of the container. If the path doesn’t already exist, clicking Validate & select data creates it.

Paste your Blob SAS URL in the appropriate box and click Validate & select data.

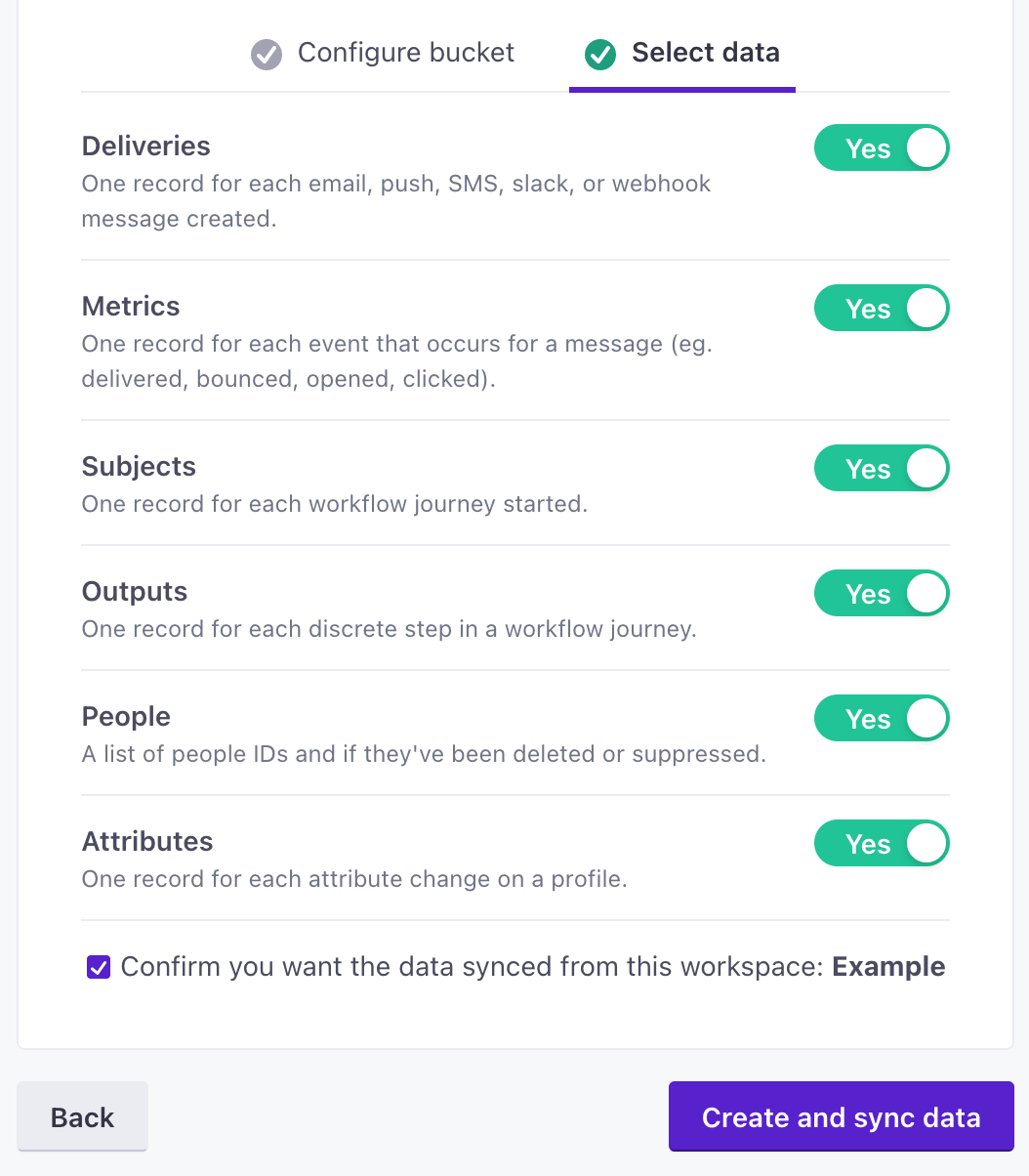

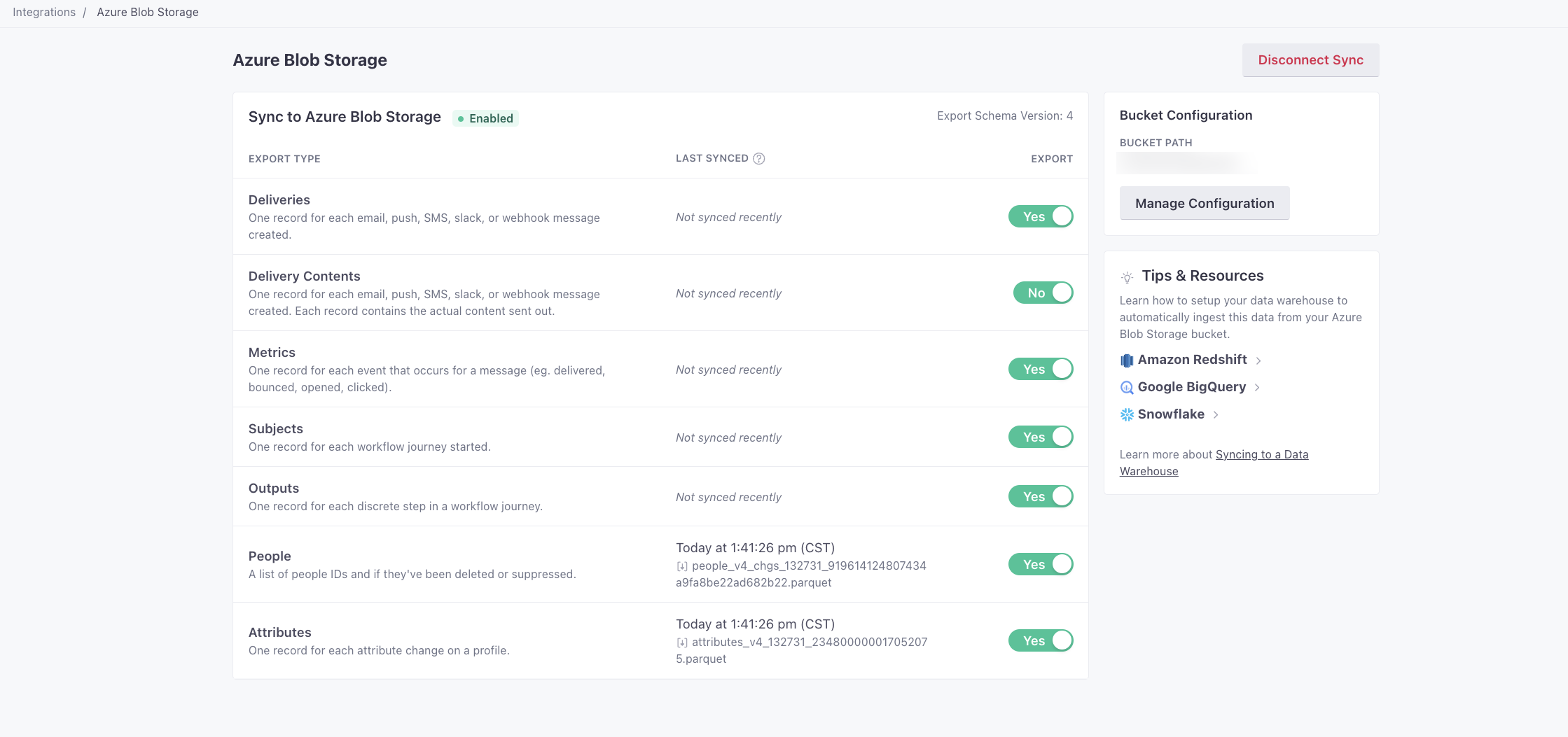

Select the data types that you want to export from Customer.io to your bucket. By default, we export all data types, but you can disable the types that you aren’t interested in.

Click Create and sync data.

Pausing and resuming your sync

You can turn off files you no longer want to receive, or pause them temporarily while you update your integration, and then turn them back on. When you turn a file schema on, we send files to catch you up from the last export. If you haven’t exported a particular file before (the file was never “on”), the initial sync contains your historical data.

You can also disable your entire sync, in which case we stop sending files altogether. When you enable your sync again, we send all of your historical data as if you’re starting a new integration. Before you disable a sync, consider whether you simply want to disable individual files and resume them later.

Delete old sync files before you re-enable a sync

Before you resume a sync that you previously disabled, you should clear any old files from your storage bucket so that there’s no confusion between your old files and the files we send with the re-enabled sync.

Disabling and enabling individual export files

- Go to Data & Integrations > Integrations and select Azure Blob Storage.

- Select the files you want to turn on or off.

When you enable a file, the next sync will contain baseline historical data catching up from your previous sync or the complete history if you haven’t synced a file before; subsequent syncs will contain changesets.

Turning the People file off

If you turn the People file off for more than 7 days, you will not be able to re-enable it. You’ll need to delete your sync configuration, purge all sync files from your destination storage bucket, and create a new sync to resume syncing people data.

Disabling your sync

Use the steps below to disable your sync. If your sync is already disabled, you can use the same page to enable it again, but clear the previous sync files from your storage bucket first. See Pausing and resuming your sync for more information.

- Go to Data & Integrations > Integrations and select Azure Blob Storage.

- Click Disable Sync.

Manage your configuration

You can change a bucket’s settings if your path changes or you need to rotate keys for security purposes.

- Go to Data & Integrations > Integrations and select Azure Blob Storage.

- Click Manage Configuration for your bucket.

- Make your changes. Whatever you change, you must re-enter your Blob SAS URL.

- Click Update Configuration. Subsequent syncs will use your new configuration.

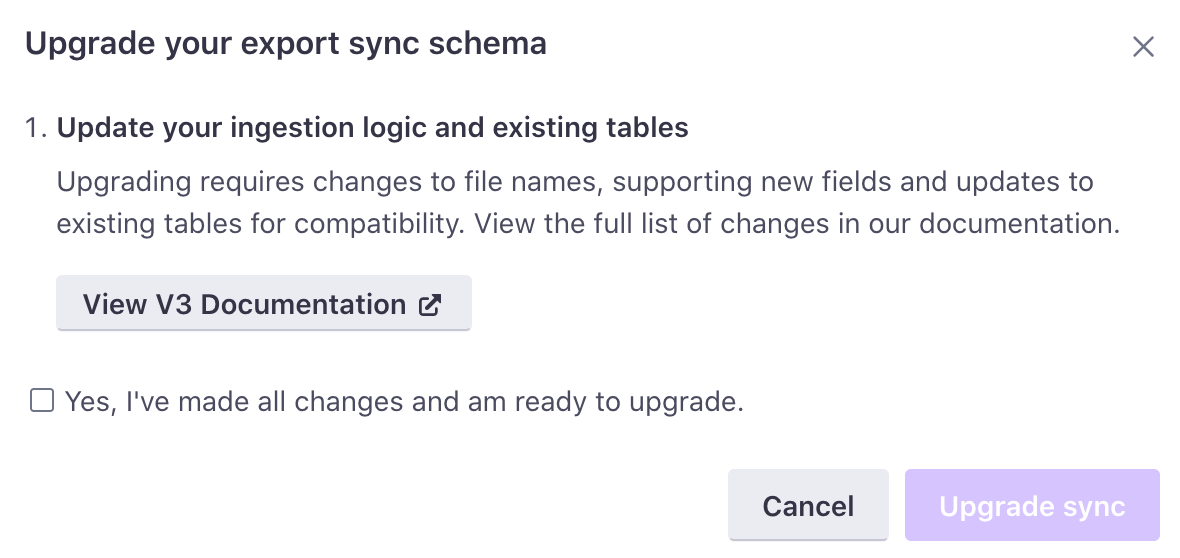

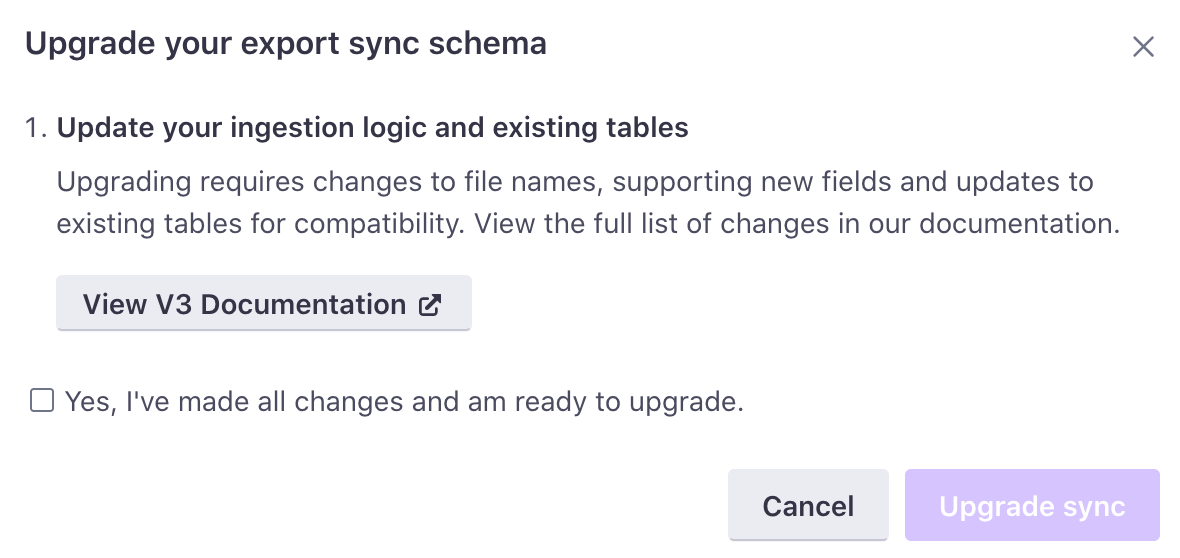

Update sync schema version

Before you update your sync schema version, see the changelog. You’ll need to update your schemas to upgrade to the latest version (v4).

When updating from v1 to a later version, you must:

- Update ingestion logic to accept the new file name format: <name>_v<x>_<workspace_id>_<sequence>.parquet

- Delete existing rows in your Subjects and Outputs tables. When you update, we send all of your Subjects and Outputs data from the beginning of your history using the new file schema.

- Go to Data & Integrations > Integrations and select Azure Blob Storage.

- Click Upgrade Schema Version.

- Follow the instructions to make sure that your ingestion logic is updated accordingly.

- Confirm that you’ve made the appropriate changes and click Upgrade sync. The next sync uses the updated schema version.
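When you update your ingestion logic, it helps to parse the file names and load each table’s files in sequence order. This sketch uses a regular expression that is an assumption built from the name formats described on this page: <name>_v<x>_<workspace_id>_<sequence>.parquet, the two-sequence initial metrics files, and the people_v4_chngs_ variant. It treats the workspace/env segment as an opaque token and assumes that sequence numbers are integers and every file ends in .parquet. The class and function names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed pattern for the documented name formats:
#   <name>_v<x>_<workspace_id>_<sequence>.parquet
#   <name>_v4_<workspace_id>_<sequence1>_<sequence2>.parquet  (initial metrics)
#   people_v4_chngs_<env>_<seq>.parquet                        (people changes)
FILE_RE = re.compile(
    r"^(?P<name>[a-z_]+?)_v(?P<version>\d+)(?P<chngs>_chngs)?"
    r"_(?P<workspace>[^_]+)_(?P<seq1>\d+)(?:_(?P<seq2>\d+))?\.parquet$"
)


@dataclass(frozen=True)
class ExportFile:
    filename: str
    name: str          # table name, e.g. "deliveries" or "delivery_content"
    version: int       # schema version, e.g. 4
    changes: bool      # True for people_v4_chngs_ files
    workspace: str     # workspace/env segment, kept as text
    seq1: int
    seq2: Optional[int]


def parse_export_filename(filename: str) -> Optional[ExportFile]:
    """Parse an export file name, or return None if it doesn't match."""
    m = FILE_RE.match(filename)
    if m is None:
        return None
    return ExportFile(
        filename=filename,
        name=m["name"],
        version=int(m["version"]),
        changes=m["chngs"] is not None,
        workspace=m["workspace"],
        seq1=int(m["seq1"]),
        seq2=int(m["seq2"]) if m["seq2"] else None,
    )


def load_order(filenames: list[str]) -> list[str]:
    """Sort recognized files by table name, then numerically by sequence."""
    parsed = [f for f in map(parse_export_filename, filenames) if f is not None]
    parsed.sort(key=lambda f: (f.name, f.seq1, f.seq2 or 0))
    return [f.filename for f in parsed]
```

Sorting numerically rather than by string keeps a file with sequence 10 after one with sequence 9. Files that don’t match the pattern are skipped, so review anything `parse_export_filename` returns `None` for.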

Parquet file schemas

This section describes the different kinds of files you can export from our Database-out integrations. Many schemas include an internal_customer_id, which is the person’s cio_id: an identifier that Customer.io generates automatically and that cannot be changed. It provides a complete, unbroken record of a person across changes to their other identifiers (id, email, etc.). You can use it to resolve the person associated with a subject, delivery, etc.

These schemas represent the latest versions available. Check out our changelog for information about earlier versions.

Deliveries are individual email, in-app, push, SMS, Slack, and webhook records sent from your workspace. The first deliveries export file includes baseline historical data. Subsequent files contain rows for data that changed since the last export.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the delivery record. |
| delivery_id | ✅ | | STRING (Required). The ID of the delivery record. |
| internal_customer_id | | People | STRING (Nullable). The cio_id of the person in question. Use the people parquet file to resolve this ID to an external customer_id or email address. |
| subject_id | | Subjects | STRING (Nullable). If the delivery was created as part of a Campaign or API Triggered Broadcast workflow, this is the ID for the path the person went through in the workflow. Note: This value refers to, and is the same as, the subject_name in the subjects table. |
| event_id | | Subjects | STRING (Nullable). If the delivery was created as part of an event-triggered Campaign, this is the ID for the unique event that triggered the workflow. Note that this is a foreign key for the subjects table, and not the metrics table. |
| delivery_type | | | STRING (Required). The type of delivery: email, push, in-app, sms, slack, or webhook. |
| campaign_id | | | INTEGER (Nullable). If the delivery was created as part of a Campaign or API Triggered Broadcast workflow, this is the ID for the Campaign or API Triggered Broadcast. |
| action_id | | | INTEGER (Nullable). If the delivery was created as part of a Campaign or API Triggered Broadcast workflow, this is the ID for the unique workflow item that caused the delivery to be created. |
| newsletter_id | | | INTEGER (Nullable). If the delivery was created as part of a Newsletter, this is the unique ID of that Newsletter. |
| content_id | | | INTEGER (Nullable). If the delivery was created as part of a Newsletter split test, this is the unique ID of the Newsletter variant. |
| trigger_id | | | INTEGER (Nullable). If the delivery was created as part of an API Triggered Broadcast, this is the unique trigger ID associated with the API call that triggered the broadcast. |
| created_at | | | TIMESTAMP (Required). The timestamp the delivery was created at. |
| transactional_message_id | | | INTEGER (Nullable). If the delivery occurred as a part of a transactional message, this is the unique identifier for the API call that triggered the message. |
| seq_num | | | INTEGER (Required). A monotonically increasing number indicating relative recency for each record: the larger the number, the more recent the record. |
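Because incremental files contain rows for data that changed, the same delivery_id can appear in more than one file. One common approach is to keep only the row with the highest seq_num for each primary key. This sketch assumes you’ve already read the parquet rows into Python dicts (for example with a parquet library, not shown). The function name is hypothetical.

```python
from typing import Any, Iterable

Row = dict[str, Any]


def latest_by_key(rows: Iterable[Row], key: str) -> dict[Any, Row]:
    """Keep the most recent row per primary key, using seq_num for recency."""
    latest: dict[Any, Row] = {}
    for row in rows:
        current = latest.get(row[key])
        # A larger seq_num means a more recent version of the record.
        if current is None or row["seq_num"] > current["seq_num"]:
            latest[row[key]] = row
    return latest
```

The same approach works for the other tables that carry seq_num, keyed on their own primary key (event_id for metrics, output_id for outputs, subject_name for subjects).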

The delivery_content schema represents message contents; each row corresponds to an individual delivery. Use the delivery_id to find more information about the contents of a message, or the recipient to find information about the person who received the message.

If your delivery was produced from a campaign, it’ll include campaign and action IDs, and the newsletter and content IDs will be null. If your delivery came from a newsletter, the row will include newsletter and content IDs, and the campaign and action IDs will be null.
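Following the rule above, you can tell where a delivery_content row came from by checking which pair of IDs is set. This is a minimal sketch over rows read into dicts. The function name and return shape are hypothetical, and rows with neither ID set are returned as None rather than guessed.

```python
from typing import Any, Optional


def content_source(row: dict[str, Any]) -> Optional[tuple[str, Any, Any]]:
    """Return ("campaign", campaign_id, action_id) or ("newsletter", newsletter_id, content_id)."""
    if row.get("campaign_id") is not None:
        return ("campaign", row["campaign_id"], row.get("action_id"))
    if row.get("newsletter_id") is not None:
        # content_id is 0 when the newsletter had no A/B test.
        return ("newsletter", row["newsletter_id"], row.get("content_id"))
    # Neither pair is set: the source can't be determined from these fields.
    return None
```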

Delivery content might lag behind other tables by 15-30 minutes (or roughly 1 sync operation). We package delivery contents on a 15-minute interval, and can export to your data warehouse up to every 15 minutes. If these operations don’t line up, we might occasionally export delivery_content after other tables.

Delivery content can be a very large data set

Workspaces that have sent many messages may have hundreds or thousands of GB of data.

Delivery content is available in v4 or later

The delivery_content schema is new in v4. You need to update your data warehouse schemas to take advantage of the update and see Delivery Content, Subjects, and Outputs.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| delivery_id | ✅ | Deliveries | STRING (Required). The ID of the delivery associated with the message content. |
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the delivery content. |
| type | | | STRING (Required). The delivery type: one of email, sms, push, in-app, or webhook. |
| campaign_id | | | INTEGER (Nullable). The ID for the campaign that produced the content (if applicable). |
| action_id | | | INTEGER (Nullable). The ID for the campaign workflow item that produced the content. |
| newsletter_id | | | INTEGER (Nullable). The ID for the newsletter that produced the content. |
| content_id | | | INTEGER (Nullable). The ID for the Newsletter A/B test item that produced the content, 0 indexed. If your Newsletter did not include an A/B test, this value is 0. |
| from | | | STRING (Nullable). The from address for an email, if the content represents an email. |
| reply_to | | | STRING (Nullable). The Reply To address for an email, if the content is related to an email. |
| bcc | | | STRING (Nullable). The Blind Carbon Copy (BCC) address for an email, if the content is related to an email. |
| recipient | | | STRING (Required). The person who received the message, dependent on the type. For an email, this is an email address; for an SMS, it's a phone number; for a push notification, it's a device ID. |
| subject | | | STRING (Nullable). The subject line of the message, if applicable; required if the message is an email. |
| body | | | STRING (Required). The body of the message, including all HTML markup for an email. |
| body_amp | | | STRING (Nullable). The HTML body of an email including any AMP-enabled JavaScript included in the message. |
| body_plain | | | STRING (Required). The plain text of your message. If the content type is email, this is the message text without HTML tags and AMP content. |
| preheader | | | STRING (Nullable). Also known as "preview text", this is the block of text that users see next to, or underneath, the subject line in their inbox. |
| url | | | STRING (Nullable). If the delivery is an outgoing webhook, this is the URL of the webhook. |
| method | | | STRING (Nullable). If the delivery is an outgoing webhook, this is the HTTP method used: POST, PUT, GET, etc. |
| headers | | | STRING (Nullable). If the delivery is an outgoing webhook, these are the headers included with the webhook. |

Metrics exports detail events relating to deliveries (e.g. messages sent, opened, etc). Your initial metrics export contains baseline historical data, broken up into files with two sequence numbers, as follows:

<name>_v4_<workspace_id>_<sequence1>_<sequence2>.

Subsequent files contain rows for data that changed since the last export.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| event_id | ✅ | | STRING (Required). The unique ID of the metric event. This can be useful for deduplication. |
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the metric record. |
| delivery_id | | Deliveries | STRING (Required). The ID of the delivery record. |
| metric | | | STRING (Required). The type of metric (e.g. sent, delivered, opened, clicked). |
| reason | | | STRING (Nullable). For certain metrics (e.g. attempted), the reason behind the action. |
| link_id | | | INTEGER (Nullable). For "clicked" metrics, the unique ID of the link being clicked. |
| link_url | | | STRING (Nullable). For "clicked" metrics, the URL of the clicked link. (Truncated to 1000 bytes.) |
| created_at | | | TIMESTAMP (Required). The timestamp the metric was created at. |
| seq_num | | | INTEGER (Required). A monotonically increasing number indicating relative recency for each record: the larger the number, the more recent the record. |
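Since event_id uniquely identifies a metric event, you can use it to skip duplicates when you count metrics per delivery. This is a minimal sketch over rows read into dicts. The function name is hypothetical, and it assumes that a repeated event_id is a duplicate of the same event.

```python
from collections import Counter, defaultdict
from typing import Any, Iterable


def metric_counts(rows: Iterable[dict[str, Any]]) -> dict[str, Counter]:
    """Count metrics (sent, opened, ...) per delivery_id, ignoring repeated event_ids."""
    seen = set()
    counts: dict[str, Counter] = defaultdict(Counter)
    for row in rows:
        if row["event_id"] in seen:
            continue  # already counted this event
        seen.add(row["event_id"])
        counts[row["delivery_id"]][row["metric"]] += 1
    return dict(counts)
```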

Outputs are the unique steps within each workflow journey. The first outputs file includes historical data. Subsequent files contain rows for data that changed since the last export.

Upgrade to v4 to use subjects and outputs

We’ve made some minor changes to subjects and outputs as part of our v4 release. If you’re using a previous schema version, we disabled your subjects and outputs on October 31st, 2022. You need to upgrade to schema version 4 to continue syncing outputs and subjects data.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the output record. |
| output_id | ✅ | | STRING (Required). The ID for the step of the unique path a person went through in a Campaign or API Triggered Broadcast workflow. |
| subject_name | | Subjects | STRING (Required). A secondary unique ID for the path a person took through a campaign or broadcast workflow. |
| output_type | | | STRING (Required). The type of step a person went through in a Campaign or API Triggered Broadcast workflow. Note that the "delay" output_type covers many use cases: a Time Delay or Time Window workflow item, a "grace period", or a Date-based campaign trigger. |
| action_id | | | INTEGER (Required). The ID for the unique workflow item associated with the output. |
| explanation | | | STRING (Required). The explanation for the output. |
| delivery_id | | Deliveries | STRING (Nullable). If a delivery resulted from this step of the workflow, this is the ID of that delivery. |
| draft | | | BOOLEAN (Nullable). If a delivery resulted from this step of the workflow, this indicates whether the delivery was created as a draft. |
| link_tracked | | | BOOLEAN (Nullable). If a delivery resulted from this step of the workflow, this indicates whether links within the delivery are configured for tracking. |
| split_test_index | | | INTEGER (Nullable). If the step of the workflow was a Split Test, this indicates the variant of the Split Test. |
| delay_ends_at | | | TIMESTAMP (Nullable). If the step of the workflow involves a delay, this is the timestamp for when the delay will end. |
| branch_index | | | INTEGER (Nullable). If the step of the workflow was a T/F Branch, a Multi-Split Branch, or a Random Cohort Branch, this indicates the branch that was followed. |
| manual_segment_id | | | INTEGER (Nullable). If the step of the workflow was a Manual Segment Update, this is the ID of the Manual Segment involved. |
| add_to_manual_segment | | | BOOLEAN (Nullable). If the step of the workflow was a Manual Segment Update, this indicates whether a person was added to or removed from the Manual Segment involved. |
| created_at | | | TIMESTAMP (Required). The timestamp the output was created at. |
| seq_num | | | INTEGER (Required). A monotonically increasing number indicating relative recency for each record: the larger the number, the more recent the record. |
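Each output row belongs to a subject (one person’s path through a workflow), so grouping outputs by subject_name and sorting them by created_at gives you an ordered view of each journey. This is a minimal sketch over rows read into dicts. The function name is hypothetical, and it assumes created_at values are comparable (datetimes or same-format ISO strings).

```python
from collections import defaultdict
from typing import Any, Iterable


def journeys(outputs: Iterable[dict[str, Any]]) -> dict[str, list[tuple[str, int]]]:
    """Group output rows by subject_name, ordering each path's steps by created_at."""
    paths: dict[str, list[dict[str, Any]]] = defaultdict(list)
    for row in outputs:
        paths[row["subject_name"]].append(row)
    return {
        subject: [(r["output_type"], r["action_id"])
                  for r in sorted(rows, key=lambda r: r["created_at"])]
        for subject, rows in paths.items()
    }
```

Each journey is a list of (output_type, action_id) pairs. You can then look up action_id against your workflow items, or delivery_id against the deliveries table.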

The first People export file includes a list of current people at the time of your first sync (deleted or suppressed people are not included in the first file). Subsequent exports include people who were created, deleted, or suppressed since the last export.

People exports come in two different files:

- people_v4_<env>_<seq>.parquet: Contains new people.

- people_v4_chngs_<env>_<seq>.parquet: Contains changes to people since the previous sync.

These files have an identical structure and are part of the same data set. You should import them into the same table.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the person. |
| customer_id | | | STRING (Required). The ID of the person in question. This will match the ID you see in the Customer.io UI. |
| internal_customer_id | ✅ | | STRING (Required). The cio_id of the person in question. Use the people parquet file to resolve this ID to an external customer_id or email address. |
| deleted | | | BOOLEAN (Nullable). This indicates whether the person has been deleted. |
| suppressed | | | BOOLEAN (Nullable). This indicates whether the person has been suppressed. |
| created_at | | | TIMESTAMP (Required). The timestamp of the most recent change to the id attribute (this is typically when the id is first set). If the id attribute doesn’t exist (the person is identified only by their email address), this value is the last time the profile changed. |
| updated_at | | | TIMESTAMP (Required). The date-time when a person was updated. Use the most recent updated_at value for a customer_id to disambiguate between multiple records. |
| email_addr | | | STRING (Optional). The email address of the person. For workspaces using email as a unique identifier, this value may be the same as the customer_id. |
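Because both people files go into one table, you can merge them and keep the most recent row (by updated_at) for each person. You can then resolve an internal_customer_id from any other table to a customer_id and email address. This is a minimal sketch over rows read into dicts, and the function names are hypothetical. It keys on internal_customer_id by default. To apply the table’s rule literally (latest updated_at per customer_id), pass `key="customer_id"` instead.

```python
from typing import Any, Iterable, Optional

Person = dict[str, Any]


def current_people(*files: Iterable[Person], key: str = "internal_customer_id") -> dict[str, Person]:
    """Merge rows from people_v4 and people_v4_chngs files, keeping the latest updated_at per key."""
    people: dict[str, Person] = {}
    for rows in files:
        for row in rows:
            k = row[key]
            if k not in people or row["updated_at"] > people[k]["updated_at"]:
                people[k] = row
    return people


def resolve(cio_id: str, people: dict[str, Person]) -> Optional[tuple[str, Optional[str]]]:
    """Map an internal_customer_id to (customer_id, email_addr), or None if unknown."""
    person = people.get(cio_id)
    if person is None:
        return None
    return person["customer_id"], person.get("email_addr")
```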

Subjects are the unique workflow journeys that people take through Campaigns and API Triggered Broadcasts. The first subjects export file includes baseline historical data. Subsequent files contain rows for data that changed since the last export.

Upgrade to v4 to use subjects and outputs

We’ve made some minor changes to subjects and outputs as part of our v4 release. If you’re using a previous schema version, we disabled your subjects and outputs on October 31st, 2022. You need to upgrade to schema version 4 to continue syncing outputs and subjects data.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the subject record. |
| subject_name | ✅ | | STRING (Required). A unique ID for the path a person took through a campaign or broadcast workflow. |
| internal_customer_id | | People | STRING (Nullable). The cio_id of the person in question. Use the people parquet file to resolve this ID to an external customer_id or email address. |
| campaign_type | | | STRING (Required). The type of Campaign (segment, event, or triggered_broadcast). |
| campaign_id | | | INTEGER (Required). The ID of the Campaign or API Triggered Broadcast. |
| event_id | | Metrics | STRING (Nullable). The ID for the unique event that triggered the workflow. |
| trigger_id | | | INTEGER (Optional). If the delivery was created as part of an API Triggered Broadcast, this is the unique trigger ID associated with the API call that triggered the broadcast. |
| started_campaign_at | | | TIMESTAMP (Required). The timestamp when the person first matched the campaign trigger. For event-triggered campaigns, this is the timestamp of the trigger event. For segment-triggered campaigns, this is the time the user entered the segment. |
| created_at | | | TIMESTAMP (Required). The timestamp the subject was created at. |
| seq_num | | | INTEGER (Required). A monotonically increasing number indicating relative recency for each record: the larger the number, the more recent the record. |
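A delivery’s subject_id is the same value as subject_name in this table, so you can join deliveries to subjects to see how each person entered the workflow. This is a minimal sketch over rows read into dicts. The function name and the copied columns are illustrative choices, and deliveries without a matching subject (for example, newsletter deliveries) get None.

```python
from typing import Any, Iterable

Row = dict[str, Any]


def attach_subjects(deliveries: Iterable[Row], subjects: Iterable[Row]) -> list[Row]:
    """Add campaign_type and started_campaign_at to each delivery, joining subject_id to subject_name."""
    by_name = {s["subject_name"]: s for s in subjects}
    joined = []
    for delivery in deliveries:
        subject = by_name.get(delivery.get("subject_id"))
        joined.append({
            **delivery,
            "campaign_type": subject["campaign_type"] if subject else None,
            "started_campaign_at": subject["started_campaign_at"] if subject else None,
        })
    return joined
```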

Attribute exports represent changes to people (by way of their attribute values) over time. The initial Attributes export includes a list of profiles and their current attributes. Subsequent files contain attribute changes, with one change per row.

| Field Name | Primary Key | Foreign Key | Description |
|---|---|---|---|
| workspace_id | | | INTEGER (Required). The ID of the Customer.io workspace associated with the person. |
| internal_customer_id | ✅ | | STRING (Required). The cio_id of the person in question. Use the people parquet file to resolve this ID to an external customer_id or email address. |
| attribute_name | | | STRING (Required). The attribute that was updated. |
| attribute_value | | | STRING (Required). The new value of the attribute. |
| timestamp | | | TIMESTAMP (Required). The timestamp of the attribute update. |
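Because each row after the initial export is a single attribute change, you can rebuild each person’s current attributes by applying the rows in timestamp order. This is a minimal sketch over rows read into dicts, and the function name is hypothetical. It assumes the initial export’s rows share these columns, so they can be replayed the same way, and that timestamps are comparable. Rows with equal timestamps are applied in input order.

```python
from typing import Any, Iterable


def current_attributes(rows: Iterable[dict[str, Any]]) -> dict[str, dict[str, str]]:
    """Replay attribute rows in timestamp order; the last value per attribute wins."""
    state: dict[str, dict[str, str]] = {}
    for row in sorted(rows, key=lambda r: r["timestamp"]):
        person = state.setdefault(row["internal_customer_id"], {})
        person[row["attribute_name"]] = row["attribute_value"]
    return state
```

With the earlier example, where User1’s email changes first to company-email@example.com and then to personal-email@example.com, the replay leaves personal-email@example.com as the current value.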

Troubleshooting

I get a 403 error in Customer.io

This means that your Shared Access Signature URL doesn’t grant Customer.io permission to access your Azure blob store. Make sure that your Access policy or Shared Access Signature grants read, add, create, write, and list permissions. If it doesn’t, edit your Access policy or generate a new SAS URL and paste it into Customer.io to fix the issue.

I can’t extend my SAS URL’s expiry date

By default, Microsoft Azure doesn’t allow a Shared Access Signature (SAS) to expire more than 1 week or 365 days in the future, depending on the signing method you use. You must create an Access policy that allows you to create tokens with a longer expiration period.

In general, we suggest that you create an Access policy as a way to both extend the life of your SAS token and as a method for revoking your SAS token later if you decide to turn off this integration.

To create an Access policy, right-click your blob container in Microsoft Azure and go to Access policy. Add a policy with the same permissions as your SAS token (read, add, create, write, and list), and set an expiry date in the future.